Ordered embeddings and intrinsic dimensionalities with information-ordered bottlenecks

Abstract

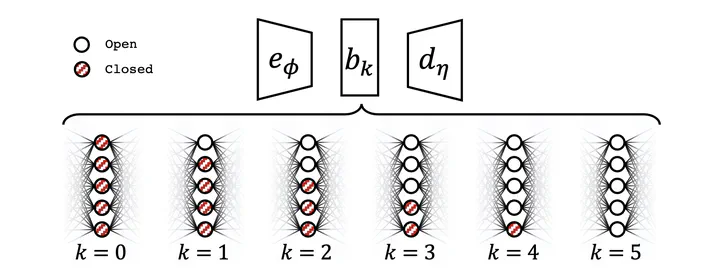

We present the information-ordered bottleneck (IOB), a neural layer designed to adaptively compress data into latent variables ordered by likelihood maximization. Without retraining, IOB nodes can be truncated at any bottleneck width, capturing the most crucial information in the first latent variables. Unifying several prior approaches, we demonstrate that IOB models achieve efficient compression of essential information for a given encoding architecture, while also assigning a semantically meaningful ordering to latent representations. IOBs effectively compress embeddings of high-dimensional image and text data, building on state-of-the-art architectures such as CNNs, transformers, and diffusion models. Moreover, we introduce a novel theory for estimating global intrinsic dimensionality with IOBs and show that they recover state-of-the-art dimensionality estimates for complex synthetic data. Furthermore, we showcase the utility of these models for exploratory analysis through applications on heterogeneous datasets, enabling computer-aided discovery of dataset complexity.

Publication

Machine Learning: Science and Technology

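The truncation idea in the abstract (keep the first latent variables, drop the rest, no retraining) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the helper names `truncate_latents` and `ordered_reconstruction_loss` are hypothetical, averaging a mean-squared error over bottleneck widths is an assumed stand-in for the likelihood objective, and a PCA basis is used in place of a trained encoder and decoder purely to keep the example self-contained.

```python
import numpy as np


def truncate_latents(z, k):
    """Keep the first k latent variables and zero out the rest."""
    mask = np.arange(z.shape[-1]) < k
    return z * mask


def ordered_reconstruction_loss(x, encode, decode, widths):
    """Mean-squared reconstruction error at each bottleneck width, plus their mean.

    Averaging over widths is an assumption made for this sketch; it rewards
    placing the most useful information in the earliest latent variables.
    """
    z = encode(x)
    per_width = [float(np.mean((decode(truncate_latents(z, k)) - x) ** 2))
                 for k in widths]
    return float(np.mean(per_width)), per_width


# Toy data: 5 features with very different variances.
rng = np.random.default_rng(0)
x = rng.normal(size=(200, 5)) * np.array([3.0, 2.0, 1.0, 0.5, 0.1])
x = x - x.mean(axis=0)

# Stand-in for a trained model (assumption): an orthonormal PCA basis.
_, _, vt = np.linalg.svd(x, full_matrices=False)
basis = vt.T


def encode(data):
    return data @ basis


def decode(latents):
    return latents @ basis.T


mean_loss, per_width = ordered_reconstruction_loss(x, encode, decode, range(6))
for k, loss in enumerate(per_width):
    print(f"width {k}: reconstruction MSE = {loss:.4f}")
```

In this toy setting the error can only decrease as the bottleneck widens, and the width at which it flattens out gives a rough sense of how many latent variables the data needs. The intrinsic dimensionality estimator introduced in the paper is not reproduced here.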